process.annotator

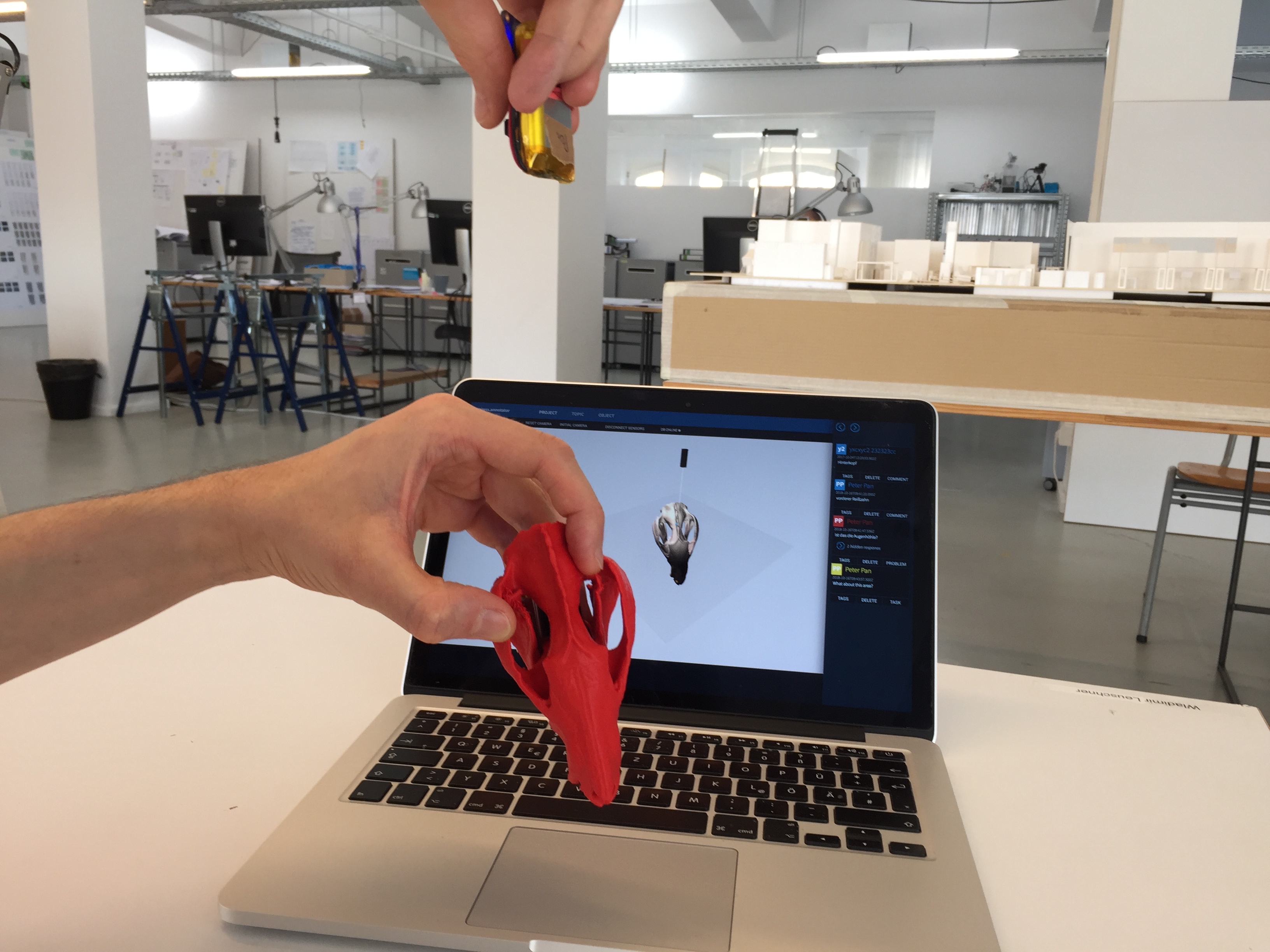

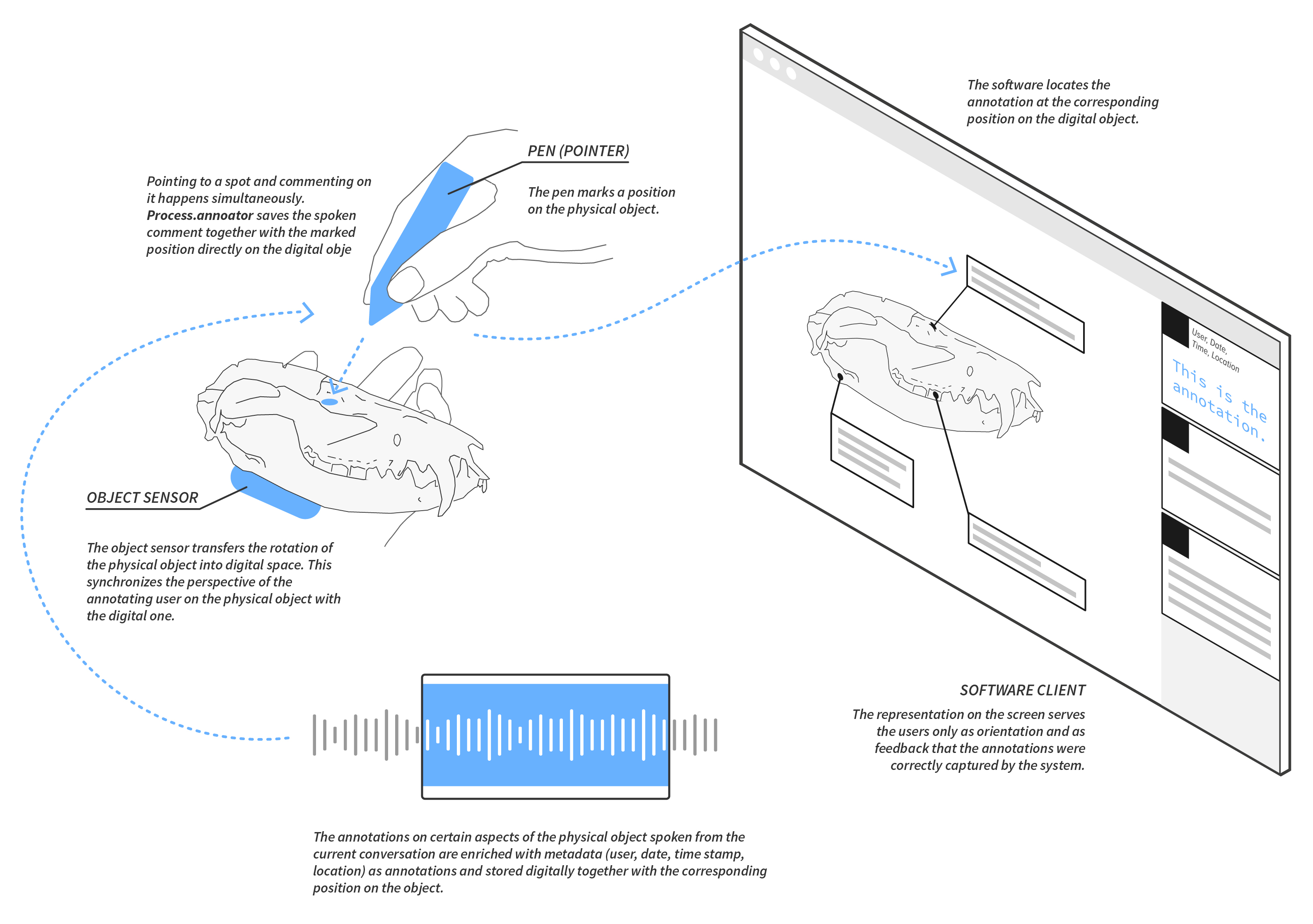

process.annotator is a tool that records tangible interactions with physical artifacts and maps them as annotations onto their virtual counterparts in real time. The project addresses questions about object-centered collaborative work settings in research and development.

The project focuses on collaborative, object-centered work situations, especially face-to-face meetings, which remain hard to replace with remote formats such as video conferences or Skype calls.

But what’s so special about face-to-face meetings? And what happens when physical objects are at the center of conversation?

Following Donald Schön, a professional conversation can be described as reflective: the physical objects involved “talk back” and begin to structure the whole communication, because participants constantly refer to specific details of the object by pointing at them.

This pointing gesture does more than replace spoken language.

As Michael Tomasello has described, the gesture establishes a relation between the speaker, the object, and the other people present. Together, the visual gesture, the object, and the participants form the basis for building a shared background of knowledge.

With process.annotator, statements can be attached directly to the physical object during a conversation, so that the object becomes a digitally represented anchor for the communication and the whole work process.

Technical background:

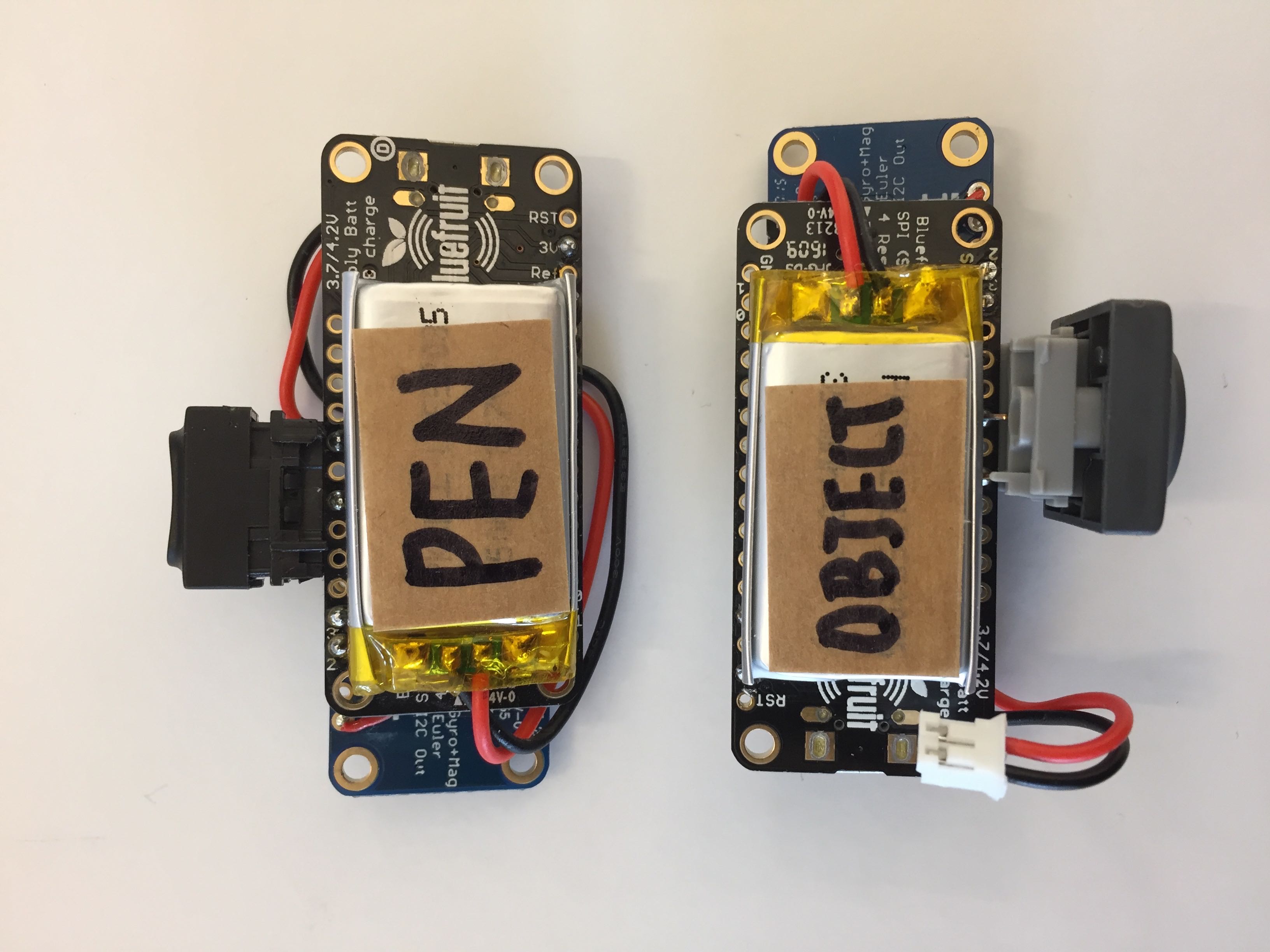

Annotating a physical object involves three components. (1) Two hardware devices (Adafruit Feather Bluefruit LE micro boards), each with a BNO055 9-DOF absolute orientation sensor, detect the rotation of the physical object and of the pointing device, respectively. (2) A graphical software tool captures annotation activities and displays them on the digital object. It is built as a native application with the Electron framework and uses web technologies such as Polymer, three.js, and PouchDB. (3) A Node.js server backend connected to a CouchDB database stores the live sessions as well as all object and annotation data, and provides an application programming interface for integrating external data sources.
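
To illustrate how two orientation readings could be combined, here is a minimal sketch in TypeScript. It is not the project's actual code. It assumes that both sensors report unit quaternions in the order (w, x, y, z) relative to the same world frame, and that the pointing device "points" along a fixed local axis. All type and function names (`Quat`, `Vec3`, `pointerDirectionInObjectFrame`) are hypothetical and chosen for illustration only.

```typescript
// Unit quaternion as [w, x, y, z] (assumed sensor output format).
type Quat = [number, number, number, number];
type Vec3 = [number, number, number];

// Inverse of a unit quaternion.
function conjugate(q: Quat): Quat {
  return [q[0], -q[1], -q[2], -q[3]];
}

// Hamilton product a * b.
function multiply(a: Quat, b: Quat): Quat {
  const [aw, ax, ay, az] = a;
  const [bw, bx, by, bz] = b;
  return [
    aw * bw - ax * bx - ay * by - az * bz,
    aw * bx + ax * bw + ay * bz - az * by,
    aw * by - ax * bz + ay * bw + az * bx,
    aw * bz + ax * by - ay * bx + az * bw,
  ];
}

// Rotate vector v by unit quaternion q (computes q * v * q^-1).
function rotate(q: Quat, v: Vec3): Vec3 {
  const r = multiply(multiply(q, [0, v[0], v[1], v[2]]), conjugate(q));
  return [r[1], r[2], r[3]];
}

// Direction of the pointer's forward axis, expressed in the object's
// local frame. The default forward axis is an assumption.
function pointerDirectionInObjectFrame(
  objectQ: Quat,
  pointerQ: Quat,
  forward: Vec3 = [0, 0, 1],
): Vec3 {
  // Orientation of the pointer relative to the object.
  const relative = multiply(conjugate(objectQ), pointerQ);
  return rotate(relative, forward);
}
```

Because the direction is expressed in the object's own frame, it stays the same when the object and the pointer are rotated together. A renderer could apply this direction to the virtual counterpart to find the spot being pointed at, for example by casting a ray against the 3D model.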

Anouk Hoffmeister: Design & Concept

Tom Brewe: Software Development

Sebastian Zappe: Hardware Development

This concept was developed within the research project Hybrid Knowledge Interactions at the Cluster of Excellence Image Knowledge Gestaltung (Humboldt-Universität zu Berlin, 2015-2018).

Publication

Hoffmeister, A., Berger, F., Pogorzhelskiy, M., Zhang, G., Zwick, C. & Müller-Birn, C. (2017). Toward Cyber-Physical Research Practice based on Mixed Reality. In: Burghardt, M., Wimmer, R., Wolff, C. & Womser-Hacker, C. (Eds.), Mensch und Computer 2017 - Workshopband. Regensburg: Gesellschaft für Informatik e.V.
